Media & Jornalismo

Print version ISSN 1645-5681; online version ISSN 2183-5462

Media & Jornalismo vol.20 no.36 Lisboa jun. 2020

ARTICLE

Silent augmented narratives: Inclusive Communication with Augmented Reality for deaf and hard of hearing

Narrativas silenciosas e aumentadas: Comunicação inclusiva para surdos e deficientes auditivos através da realidade aumentada

Susanna Berra*,

https://orcid.org/0000-0001-7762-6405


Cláudia Pernencar**,

https://orcid.org/0000-0001-8981-2133


Flávio Almeida***

https://orcid.org/0000-0002-5228-8099


* Politecnico di Milano, Italy

** Instituto de Comunicação da Nova (ICNOVA), Portugal. Unidade de Investigação em Design e Comunicação (UNIDCOM), Portugal

*** Universidade Europeia. IADE - Faculdade de Design, Tecnologia e Comunicação Unidade de Investigação em Design e Comunicação (UNIDCOM), Portugal

ABSTRACT

Deafness is an often undervalued but increasing problem amongst the world’s population. Besides the difficulty in hearing sounds, it involves many cognitive and emotional issues, like learning difficulties, isolation, and disempowerment. In this paper, the design of artifacts for deaf people is analyzed from two different perspectives: Technology and Communication Science. Regarding technological issues, research shows how Augmented Reality can be applied using screen interfaces or smart glasses translating sounds into visual stimuli; looking at the communication possibilities, the focus is on storytelling and how it can be combined with the technology used to engage people, enhance learning activity and create a community. By looking into these two aspects, the suggested approach is to merge them in a conceptual creative project that can be appealing and useful to the public, through the use of interactive storytelling, while also using the visual benefits of an immersive Augmented Reality experience.

Keywords: augmented reality; hearing loss; inclusive communication; storytelling

RESUMO

A surdez é um problema muitas vezes desvalorizado, mas crescente entre a população mundial. Além da dificuldade em ouvir sons, envolve problemas cognitivos e emocionais, como dificuldades de aprendizagem, isolamento e incapacitação. Neste artigo, o design aplicado à surdez é analisado sob duas perspetivas diferentes: a tecnologia e as ciências da comunicação. Em relação às questões técnicas, a investigação mostra como a Realidade Aumentada pode ser aplicada através de interfaces ou óculos inteligentes que transformam os sons em estímulos visuais; do ponto de vista da comunicação, o foco está na narração de histórias e como estas podem ser articuladas com a tecnologia mencionada para envolver as pessoas, aprimorando as atividades de aprendizagem e a ligação com a comunidade. Analisando esses dois aspetos, a abordagem aqui sugerida é juntá-los num projeto conceptual criativo que possa ser atraente e útil ao público, por meio da utilização de histórias interativas, ao mesmo tempo que são aproveitados os benefícios visuais de uma experiência imersiva com a Realidade Aumentada.

Palavras-chave: comunicação inclusiva; narrativa; perda de audição; realidade aumentada

1. Introduction

Communication is a crucial aspect of any community: sounds, voices, images, and gestures are key elements through which people express themselves and interact with their surroundings. However, not everyone is able to perceive all the content of this communication or to reply with the same kind of stimuli. What if it were possible to compensate for these gaps by adding another layer to reality when needed? This is what inclusive communication tries to do, with the help of technology. In this context, the word “inclusive” refers to all the types of communication systems that are used to transmit information not just through written text or oral speech, but also through gestures, facial expressions, body language, pictures, signs, and objects. Communication should be accessible to everybody, regardless of disabilities or conditions that can prevent people from understanding written or spoken words.

This paper focuses specifically on deafness, a condition in which the lack of proper understanding of sounds has to be compensated for by an increased amount of visual stimuli. Some technologies, in particular those with a strong visual impact such as Augmented Reality (AR), could be the key to adding the extra layer of reality that is missing because of the absence of auditory stimuli. Moreover, AR can be used as a tool to engage people in new experiences, because human-to-human interaction is not only about the transmission of information: it also concerns the ability to live together in society, sharing stories and experiences.

1.1 The problem of hearing loss

According to the World Health Organization (WHO), there are 466 million people in the world with disabling hearing loss (over 5% of the world’s population), and 34 million of them are children. This number is estimated to double by 2050 (World Health Organization, 2019). Hearing loss covers a wide array of conditions, ranging from “hard of hearing” (people with hearing loss that can be mild, moderate or severe) to “deafness” (which implies a profound hearing loss, with very little or no hearing at all) (Blazer et al., 2016). For the deaf community, hearing loss is a condition, not a disability: for this reason, the term “hearing impairment” is considered offensive and should be avoided (National Association of the Deaf, 2019). Not all Deaf and/or Hard of Hearing (DHH) people present the same condition, symptoms, treatment or mode of communication: each person reacts differently to sounds, vibrations, spoken language, and lip-reading. In addition, sign language is not universal but specific to each country. Moreover, communicating through sign language can be hard if the receiver is not familiar with it, as happens in most conversations between DHH and hearing people: the limited number of communication options available in such interactions is the main cause of problems such as loneliness, anxiety or social isolation (Kushalnagar, 2019).

While it is true that young people are generally less affected by hearing loss than the elderly, it is also important to consider that deafness in children may have negative consequences for the child’s development and, for this reason, has to be addressed with special consideration. Potter et al. (2014) argued that deaf children generally have reduced literacy and slower academic progress, reduced social and emotional development, reduced empathy and a level of nervousness in novel situations, delayed language development, and limited or delayed spoken language. In the same analysis, the authors noted that deaf children are active and innovative in approaching communication, have sensitive visual attention in their peripheral vision, enhanced attention to small visual changes, and a capacity for visual learning.

Inclusive communication, especially when focused on non-verbal and visual stimuli, aims to reach every person, regardless of their specific difficulties, and can complement traditional verbal communication in the transmission of affective content (Walker & Trimboli, 1989). A focus on visual stimuli may also be useful for hearing people, because it helps to overcome the sound barrier between the hearing and the non-hearing communities and to ensure full integration in society.

Communication is a delicate issue for the large community of DHH people of all ages, but especially for the youngest ones: as mentioned above, if the missing auditory stimulus is not compensated for, the child’s learning capabilities and social skills could be severely compromised. Starting from this central problem, the state of the art was studied from two different perspectives on hearing loss: Technology and Communication Science. It is believed that an approach merging both fields within a design project enhances the potential of inclusive communication for DHH people and improves the possibilities of integration with the hearing community. Based on the above, three research questions are presented in this paper:

1. In the technological area, is AR a useful tool for DHH people?

2. In the Communication Science field, is storytelling an effective method for inclusive communication?

3. Could using AR for interactive stories change/increase the potential of inclusive communication for DHH people, especially for young people?

2. AR and smart glasses

Finding the right technology to enhance inclusive communication is far from being a closed subject: in fact, it will probably be one of the biggest technological challenges for the future of communication[1]. The potential of AR lies in interacting with reality from a new perspective, by overlaying a digital layer and conveying information through visual stimuli. Nowadays, the main interfaces on which it is possible to experience AR are smartphones, computers and tablet screens, which are easily available to everybody, and wearable interfaces. AR has been a common feature of head-mounted displays since their origins: these devices are worn by users and allow them to see, through stereoscopic systems, 3D objects superimposed on reality. On the one hand, the possibility of overlaying digital and real contexts directly in front of the user’s eyes, without an extra object like a smartphone, is a very interesting feature for non-verbal communication systems. On the other hand, those kinds of devices have not been widely adopted yet, for reasons that range from technological issues to public hostility (Basoglu et al., 2017; Cipresso et al., 2018; Due, 2014). The first AR wearable interface that was distributed on a large scale, Google Glass, has been considered one of the biggest technological flops in history, and nowadays smart glasses are still far from being commonly accepted. However, they still have very high potential, and the continued research on this topic is very promising for future developments.

When AR is employed to help people with different communicative conditions, other technologies are also necessary to compensate for the lack of certain stimuli. Regarding hearing loss, the most significant ones are Automatic Speech Recognition (ASR), Text-to-Speech Synthesis (TSS), Audio-Visual Speech Recognition (AVSR), and Gesture Recognition (GR). ASR identifies the human voice and processes it into readable text. It is available in smartphone apps or directly on head-mounted displays. Conversely, TSS converts written information into speech: this feature is very useful for deaf-mute people or completely deaf people who have difficulty articulating understandable sounds. Attempts to join the auditory with the visual part have led to AVSR, a system that improves speech recognition by combining audio data with visual data, such as facial expressions, also in real time (Mirzaei et al., 2014). Taking advantage of the visual part, GR allows the system to recognize hand gestures and interpret them accordingly. The main system that works with it is Leap Motion[2], which is usually embedded in the new generation of head-mounted displays for AR. Another way to use GR, without a wearable device, is Microsoft Kinect, which is able to track hand and body movements. The main issue with sign language, however, is that it includes much more than simple hand gestures: it is a combination of facial, body, and hand movements that assume a certain meaning within the context of a specific sentence.

For this reason, GR is still not able to properly translate any sign language into text or speech, but recent scientific studies have shown crucial progress in this field, and the issue will most probably be solved in the not-so-distant future (Al-Shamayleh et al., 2018).

2.1 AR for deaf and hard of hearing

When the problem of communication is considered simply as an obstacle to the transmission of information, there are many different applications in which AR, especially on immersive devices such as smart glasses, has been used to help DHH people. While many of them have been developed only at a theoretical level, some have reached the market and are starting to spread. To highlight the potential of AR in helping with deafness, some examples are presented below, divided into categories according to their purpose, field of application, and technology used.

2.1.1 Talking

The main purpose of most of the devices designed for hearing loss is to ease the conversation between a DHH person and a hearing person who is not familiar with sign language. Artifacts in this category generally work with ASR and AVSR, so the device is able to translate the hearing person’s speech into words that are displayed on the lenses of AR glasses (Mirzaei et al., 2014). Speech recognition technology can be incorporated into the smart glasses themselves or provided by an app on the second person’s phone.
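As a rough illustration of the display step described above, the sketch below splits an already recognized transcript into short caption frames that could fit a small lens display. It assumes that the ASR output arrives as plain text; the function name `caption_frames` and the limits `max_chars` and `lines_per_frame` are hypothetical and are not taken from any of the cited systems.

```python
import textwrap
from typing import List


def caption_frames(transcript: str,
                   max_chars: int = 32,
                   lines_per_frame: int = 2) -> List[List[str]]:
    """Split recognized speech into small groups of short lines.

    max_chars and lines_per_frame are illustrative limits for a small
    lens display (assumed values, not device specifications).
    """
    # Wrap the transcript at word boundaries so that no line exceeds max_chars.
    lines = textwrap.wrap(transcript, width=max_chars)
    # Group consecutive lines into frames shown one after the other.
    return [lines[i:i + lines_per_frame]
            for i in range(0, len(lines), lines_per_frame)]
```

Each frame would be shown in sequence while the speaker keeps talking; a real system would also need timing and error handling, which this sketch leaves out.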

Google Glass was the first device offering this possibility, in 2014, with the Captioning on Glass[3] project: the hearing person talks into a smartphone and the DHH person reads the sentence on the lenses of Google Glass (Figure 1). The main concept of this device is to make the conversation as smooth as possible: the presence of a wearable interface is essential because it allows people to look at each other while talking, greatly improving the overall connection during the conversation. However, some issues remain open. The first is the necessity of wearing a device, in this case Google Glass: the presence of smart glasses on people’s faces is still not completely accepted, and it seems to identify people as “different”, increasing the discomfort of the situation. Secondly, for a DHH person, as it would be for a hearing person, making conversation by responding to a written text is more mechanical and less natural than communicating in their own language (sign language). Moreover, the hearing person needs to have a special app on the smartphone and connect it to the device: all these obstacles strongly discourage spontaneous conversation. To avoid the need for a phone connection, the new generation of smart glasses has ASR functions included. However, a 2018 study tested one of them (Epson Moverio BT-200) and found that, while the general results were positive compared to smartphone applications, the glasses still lacked accuracy and processing speed. This evidence implies that the glasses’ features need to be improved for a really effective experience (Watanabe et al., 2018).

2.1.2 Enjoying

The second category does not directly address human-to-human conversation, but mostly individual interaction with entertainment or educational material. In this case, ASR technology is not needed, because the text or the sign language video is pre-loaded on the device and simply displayed at the right time. A recent example of this scenario is the application of the Epson Moverio BT-300[4] at the National Theatre in London[5] (Figure 2). The system allows DHH people, or whoever has difficulty following the play, to read the subtitles directly on the lenses of their glasses, following the person’s gaze wherever their eyes turn. Surprisingly, this project has already been deployed in the theatre and was very positively received by both DHH and hearing audiences. A broader diffusion is expected in the near future. Similar applications have also been designed for other kinds of venues, e.g., planetariums[6], places traditionally avoided by DHH people because of the difficulty of looking simultaneously at the projection of the stars and at the interpreter. In this case, the AR interface would not show subtitles, but the video of the interpreter translating the audio commentary. In both examples, the presence of “another layer” on top of reality compensates for the lack of sound, even if the experience always depends on pre-recorded text or video. Nonetheless, it is relevant to understand how different environments have started to deal with inclusive communication. The progressive introduction of smart glasses with AR means that these kinds of devices are being more and more accepted into (and requested by) society.

2.1.3 Learning

In recent years, researchers have often investigated how to design a context in which DHH students of any age can follow mainstream classes as well as hearing students (Almutairi & Al-Megren, 2017; Al-Megren & Almutairi, 2019; Deb et al., 2018; Ioannou & Constantinou, 2018; Miller et al., 2017; Parton, 2017). As mentioned in the introductory section of this article, DHH students are considered visual learners: for them, images and gestures (sign language) carry a stronger meaning than written text. On this issue, a recent study (Ioannou & Constantinou, 2018) explored the use of AR glasses to facilitate communication between DHH learner and instructor, and “how the use of AR via a typical smart device (smartphone or tablet) can support DHH students with acquiring new vocabulary and understanding complex texts” (Ioannou & Constantinou, 2018, p. 392). The authors reported positive results in both cases. Almutairi and Al-Megren conducted research to understand what kinds of stimuli (sign language, pictures, videos, fingerspelling) are useful for DHH Arab children’s literacy development. The preliminary studies were published in 2017, while in 2019 the authors presented a more complete collection of results, underlining first of all the importance of integrating several stimuli during the learning process, and consequently their design of an AR application to aid DHH Arab children in their linguistic development (Almutairi & Al-Megren, 2017; Al-Megren & Almutairi, 2019).

For adult learners, devices such as the one used by the National Theatre are useful because they allow students to follow lectures without having to switch their gaze between the teacher, the notebook and the sign language interpreter: studies have shown that displaying the sign language video directly on the lenses of AR smart glasses reduces the effects of visual field switches and makes the lecture easier to follow (Miller et al., 2017).

No large-scale project applying the approach described above is yet known, apart from demos and small simulations, mostly because of technological accuracy issues, the cost of the devices involved and, again, the lack of social acceptance of wearable devices. Studies focused on teenagers showed that, however useful the system was, students were uncomfortable wearing a device in class because it was “not stylish enough”: the device marked them as different (Ioannou & Constantinou, 2018, p. 385). However, the recurrent presence of this topic in the research field is a good starting point for a possible future change in this situation.

3. Effective communication through narrative

Communication is not simply the transmission of information between two people. It involves deeper nuances, built on social relations, shared experiences, and emotional connections. Too much credit is given to spoken words when actually a large part of interpersonal communication, even between two hearing people, is non-verbal. The most effective way to transmit a message is not always to recognize a basic sentence in one language, translate it into another and just deliver the final result to the receiver: this can surely be effective for basic levels of human communication, but contextualization through storytelling improves dialog in human-to-human, interface-to-human and media-to-human interaction. While from the technical point of view, as explained in the previous sections, the scientific community has focused on developing a set of technologies addressing basic needs, Communication Science researchers have developed a specific interest in applying interactive storytelling to improve the quality of the experience and, as a consequence, the time and energy that people are willing to spend on the device (Jenkins et al., 2013; Piredda et al., 2015). The limited diffusion of most of the examples shown in the previous sections is also due to the fact that the experience beyond the physical interaction has often not been considered enough.

This article asks why and how storytelling could be important for the DHH community. When dealing with hearing loss, it is crucial to remember that the problem is not only related to physical conditions: it also stems from affective and emotional issues. Learning difficulties, social exclusion, and distress are some of the possible consequences of the lack of proper communication with one’s peers. Using narratives as a form of social communication has been shown to be a very effective way to facilitate the emotional participation of the audience and enhance learning abilities (Jenkins et al., 2013).

A possible role of storytelling in the DHH community has been suggested by deaf journalist Trudy Suggs. In the article “The importance of storytelling to address deaf disempowerment” (Suggs, 2018), the author pointed out the need to share common knowledge about deafness and related problems, first of all the disempowerment originating from the severe lack of data and resources available for DHH people’s instruction. It was also suggested that sharing stories with each other and with other people, such as interpreters, is a way of collecting distinctive points of view that are useful for developing solutions, allowing DHH people to gain empowerment (Suggs, 2018).

The literature review showed that storytelling projects for DHH people exist, but they are mostly limited to a very basic use of technology and social media, with stories shared in the form of sign language videos or written testimonies, digital or printed, about individual experiences. The narrative theme, however, shows a lot of potential when combined with technology.

3.1. Telling stories with AR

Within the intricate field of communication through storytelling, AR provides new tools to apply and spread narrative techniques more effectively. In particular, research has focused on the possibility of mixing real and virtual components to enrich the experience, reinforcing the surroundings instead of neglecting them: according to Azuma (2015), this feature is what makes storytelling through AR really meaningful and powerful.

The following paragraphs will analyze, through different examples, how Communication Science has involved AR technologies in order to build effective storytelling products.

3.1.1 Location-based narratives

As mentioned before, one of the strongest features of AR storytelling is its capacity to join the real and the virtual to create an entertaining and educational experience. The main purpose of location-based narratives is to reinforce reality by adding new digital content to it or recalling past events connected to the place itself (Azuma, 2015). There are many examples in this category. Some stories can be used to increase the engagement of a real journey by train (Fantasia Express: an AR storytelling on trains[7]) or boat (The Odissey: an AR storytelling on boats[8]), or simply of a visit to a place (Secret Coast: Augmented Reality Storytelling[9] - Figure 3), by adding stories to the existing landscape. Others can transform an ordinary environment, like the home, into a magical world for children to interact with (Wonderscope[10] - Figure 4).

The examples above share a common trait: all use the smartphone as the main interface. However, the experience of looking at a real place through the screen of a mobile device can be constricting and annoying. It is believed that the overall experience could be improved by a more immersive interface such as smart glasses, which allow users to look at augmented surroundings as if they were experiencing reality itself. It would therefore be relevant to see research on location-based storytelling with wearable devices, when and if smart glasses become more widespread, socially acceptable, and technologically accurate. An example of a location-based narrative without a smartphone is Story Scopes[11], by Immersive Storylab: through public viewing telescopes (adapted to display AR), people look at the stories emerging from the surrounding lake. This experience happens in real time, because people look through a device that they would normally use to view their surroundings, but with the addition of digital content. This device in particular can be adapted for larger groups of people through the use of holograms.

3.1.2 Collaborative narratives

The research conducted points to the possibility of enjoying a story together with other people, interacting and creating a community, as one of the important features of AR-based narratives. In fact, AR enhances story sharing and increases the opportunity for co-creation, resulting in a reinforced sense of community among the people involved. Studies showed that collaboration as a method of designing new stories is an effective way to share the experience and improve the sense of presence (Gironacci, Mc-Call, & Tamisier, 2017). Some location-based narratives are also designed with this purpose: e.g., in 110 Stories[12] (Figure 5) the augmented skyline of New York is an opportunity for people to share their stories about the missing elements, the Twin Towers. What is real is merged with a digital representation of collective memory and becomes an opportunity to strengthen the community around shared experiences.

3.1.3 Learning narratives

Interactive storytelling has been adopted to engage children’s creativity, enhance their learning processes, and stimulate collaboration with others (Yilmaz & Goktas, 2017). In particular, collaborative teaching strategies present more effective results because of the interdependent and differentiated roles given to participants. On this topic, some research projects are using storytelling in different contexts and with various age groups, with generally positive results (Bressler & Bodzin, 2013; Winzer et al., 2017). Educational AR projects, like location-based ones, usually involve the use of smartphones or tablets to run AR applications or to read AR books (texts in which the story is delivered both by the physical medium, the book itself, and by the virtual one, the digital content unlocked with the application and connected to the book’s pages).

3.2 Narratives for inclusive communication

Interactive storytelling is particularly interesting for its applications in the field of inclusive communication. Given its visual nature, an AR-based narrative works well for physical or mental conditions that lead people to struggle with traditional verbal communication, and also for children who have difficulty following mainstream learning systems. A disorder like Autism, for example, does not directly involve verbal communication issues but prevents children from being aware of emotional and interpersonal cues, resulting in difficulties in everyday social interactions. For them, inclusive communication means being able to merge the content of ordinary speech with all the indirect information usually provided by social context and body attitude, which is harder for an Autistic child to detect. Different studies applied AR narrative techniques to help with this problem, and the general research response seems to be very positive (Chen et al., 2015; Ip et al., 2018; Lee et al., 2018): these systems were effective in teaching children how to recognize, understand and respond to social cues. The reason why this research can be linked not only to Autism but also to other kinds of communication impairments is that AR is a bridge between real-world activities and digital experience: 3D animation joined with real spatial information in different scenarios helps stimulate children’s mental skills, because they can directly see what is happening without the need to translate written words or 2D images into real-life situations (Lee et al., 2018).

4. Concept project and discussion

As shown in the previous sections, deafness has been addressed in the technology field mostly by focusing on visual stimuli that translate what the DHH person is not able to hear. Meanwhile, the Communication Sciences have tested the importance of storytelling-based systems for sharing a different kind of knowledge and engaging people on a deeper level. Based on the analysis of both situations, this paper suggests a possible approach to designing communication experiences for DHH people, using the technological medium offered by AR (especially if immersive, with wearable devices) to deliver a broader message that also includes sharing stories and engaging people in understanding each other’s culture and problems. By joining the positive effects of the two categories, AR and storytelling, it is believed that the outcome can help DHH people, especially the youngest, in the difficult task of communicating and integrating with the hearing community. However, this approach has some challenges to face. Firstly, there are technical problems: AR is still struggling with many issues, especially regarding smart glasses. The reason why they are not yet a commercial success, as smartphones and screen-based interfaces are, lies in several factors: technological development (advanced hardware has to be included in an object that can be easily worn); their impact on society (they are mostly not accepted in public spaces); and the lack of affordable options (which makes their spread difficult) (Due, 2014). These issues can be partially set aside because smart glasses are a rapidly evolving technology, and recent progress provides a solid basis for saying that today’s problems could easily be solved in the span of a few years.

The technology-driven projects shown above remain mostly on paper not just because of technical issues, but because the value they offer is not deep enough to justify the use of high-level technologies, and the User Experience (UX) is still problematic. By working more deeply on the communication level, they could become more appealing to a broader public. The only really successful product (from a marketing point of view) among those mentioned in Section 2 is the smart glasses application at the National Theatre, because it builds on people’s interest in being entertained: AR is an effective medium for transmitting a story, and the fact that both DHH and hearing people can enjoy it makes the product really inclusive. On the communication side, instead, storytelling projects are generally not useful for the deaf community unless they are specifically designed to share its stories, and those that are do not take advantage of technological breakthroughs that could help them reach their purpose. By taking into consideration the two aspects of the problem, we believe the result could be a project that is appealing and useful to the public (using storytelling to engage and entertain), but also technically innovative (with the positive outcome of AR: adding another layer to reality to compensate for what is missing from auditory stimuli).

4.1 Space for Signs - A concept project

This article is part of the theoretical study behind the concept project designed by the main author[13]. The Design Process was structured as follows:

1. Framing of the design problems;

2. Mapping the empirical research;

3. Definition of the brief;

4. Identification of the target and creation of profiles;

5. First concept hypothesis about what technology and content could fulfill the design brief.

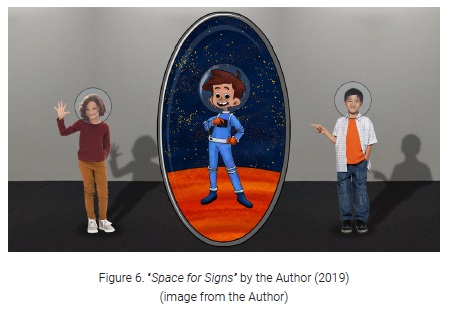

After the design questions were defined and the state of the art was reviewed, the purpose of the concept was established: an interactive game that teaches children about non-verbal communication, focusing on DHH people and sign language. Based on the analysis performed, we defined the project’s target as children between 8 and 10 years old, both DHH and hearing, for whom we suggested two possible personas[14]: a 10-year-old hearing boy and an 8-year-old deaf girl. From these personas’ wants, needs, and pain points, we refined the initial concept: a storytelling-based interactive game (Space for Signs) that teaches sign language to children between 8 and 10 years old.

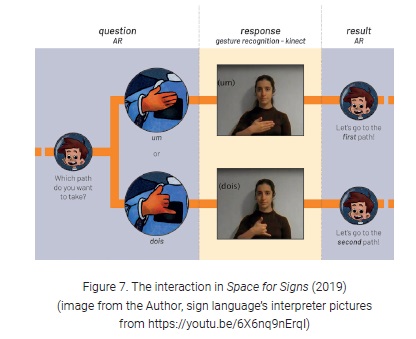

In the story, a digital character, the astronaut “Cosmo” (Figure 6), interacts with the children, giving them instructions about which gestures to perform in order to communicate in sign language. Through their hand movements, detected by a Kinect (Figure 7), children can influence the story of “Cosmo” and help him explore the planet he crashed on. The underlying story keeps the children engaged in the learning activity, while AR allows them to communicate, in a real environment, with the digital character teaching them.
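The interaction loop described above can be illustrated with a minimal sketch. It is not the project’s implementation, which has not been built: it assumes that some gesture-recognition component (for example, one fed by the Kinect) already outputs a text label for each recognized sign, and every name, sign label, and line of narration below is hypothetical. The sketch only shows how a recognized sign could advance the story, and how a wrong attempt could trigger contextual help from “Cosmo”.

```python
from dataclasses import dataclass


@dataclass
class StoryStep:
    narration: str      # what Cosmo tells the children at this step
    expected_sign: str  # label of the sign they are asked to perform
    hint: str           # contextual help given after a wrong attempt


class SignStory:
    """Advances a linear story each time the expected sign is recognized."""

    def __init__(self, steps):
        self.steps = steps
        self.index = 0

    @property
    def finished(self):
        return self.index >= len(self.steps)

    def prompt(self):
        """Return the narration for the current step."""
        return "The end." if self.finished else self.steps[self.index].narration

    def on_sign(self, recognized_sign):
        """Handle one recognized sign label and return Cosmo's reaction."""
        if self.finished:
            return "The end."
        step = self.steps[self.index]
        if recognized_sign == step.expected_sign:
            self.index += 1       # correct sign: the story moves forward
            return self.prompt()
        return step.hint          # wrong sign: contextual help, same step


# Hypothetical two-step story; labels and texts are placeholders.
story = SignStory([
    StoryStep("Cosmo waves at you: sign HELLO to greet him.", "HELLO",
              "Cosmo repeats the sign slowly. Try again!"),
    StoryStep("Cosmo is thirsty: sign WATER to help him.", "WATER",
              "Cosmo shows the sign once more. Try again!"),
])
```

Calling `story.on_sign("HELLO")` on a fresh story returns the narration of the second step, while any other label returns the hint and leaves the story where it was.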

According to Levin-Gelman (2014), complex but feasible challenges (in this case, communicating with a digital character in an alternative way) and contextual help in case of failure (the character “Cosmo” is there to help and guide the children through the story) are among the most important features when designing a game for 8- to 10-year-old children.

The game is designed for DHH children who attend mainstream schools and for their hearing classmates, to support integration and to increase knowledge of the different languages (spoken and signed). For this reason, it has to be played by several children at the same time, so that they progress through the story by means of collaboration and social interaction.

Further steps in the Design Process would include a careful observation of the selected target to specify the users’ wants and needs in terms of UX, which, as pointed out by Levin-Gelman (2014), is especially important when dealing with a very young target. The project would then have to go through a series of prototypes and tests, both for the technological part (management of the technical components: cameras, Kinect, AR) and for the content part (understanding, with the help of learning specialists, which signs should be taught and in which kind of story they should be embedded).

We are aware of the long road that lies ahead; our purpose in this paper is not to report the results of a finished project, but to present an empirical study that may, hopefully, open a path for other researchers to follow.

Conclusions

When designing for DHH people, several relevant facts have to be considered. The physical issue of hearing loss can lead to many cognitive and emotional issues, from learning difficulties to real problems with social inclusion. The research showed that it is possible, with the use of AR technology, to provide some help in dealing with hearing loss, but the technology by itself is not enough in complex scenarios. The use of storytelling as an empathic way of communicating and as an interactive method of sharing information has already yielded effective results in many different applications, especially when combined with AR. An inclusive approach that merges the technical and the expressive fields can result in more useful and relevant projects that cover the different physical and emotional needs of DHH people. It should be noted that the present research has a very important limitation: the lack of a practical application of what has been theorized. This study provides only a preliminary perspective on how AR and storytelling could be applied to improve design for people with hearing loss. However, the potential revealed by this research is worthy of further analysis.

REFERENCES

Abas, H., & Badioze Zaman, H. (2011). Visual Learning through Augmented Reality Storybook for Remedial Student. In H. B. Zaman, P. Robinson, M. Petrou, P. Olivier, T. K. Shih, S. Velastin, & I. Nyström (Eds.), Visual Informatics: Sustaining Research and Innovations (pp.157-167). Berlin, Heidelberg: Springer Berlin Heidelberg.

Al-Megren, S., & Almutairi, A. (2019). User requirement analysis of a mobile augmented reality application to support literacy development among children with hearing impairments. Journal of Information and Communication Technology, 18(1), 97-121. Retrieved from http://www.jict.uum.edu.my/images/vol18no1jan19/97-121.pdf

Almutairi, A., & Al-Megren, S. (2017). Augmented Reality for the Literacy Development of Deaf Children: A Preliminary Investigation. In Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility, 359-360. DOI: 10.1145/3132525.3134789.

Al-Shamayleh, A. S., Ahmad, R., Abushariah, M. A. M., Alam, K. A., & Jomhari, N. (2018). A systematic literature review on vision based gesture recognition techniques. Multimedia Tools and Applications, 77(21), 28121-28184. DOI: 10.1007/s11042-018-5971-z.

Azuma, R. (2015). Location-Based Mixed and Augmented Reality Storytelling. In W. Barfield (Ed.), Fundamentals of Wearable Computers and Augmented Reality, Second Edition, (pp. 259-276). Oxfordshire: CRC Press. DOI: 10.1201/b18703-15.

Basoglu, N., Ok, A. E., & Daim, T. U. (2017). What will it take to adopt smart glasses: A consumer choice based review? Technology in Society, 50, 50-56. DOI: 10.1016/j.techsoc.2017.04.005.

Blazer, D. G., Domnitz, S., & Liverman, C. T. (2016). Hearing Loss: Extent, Impact, and Research Needs. Washington (DC): National Academies Press (US). Retrieved from https://www.ncbi.nlm.nih.gov/books/NBK385309/

Bressler, D. & Bodzin, A. (2013). A mixed methods assessment of students’ flow experiences during a mobile augmented reality science game. Journal of Computer Assisted Learning, 29(6), 505-517. DOI: 10.1111/jcal.12008.

Chen, CH., Lee, IJ., & Lin, LY. (2015). Augmented reality-based self-facial modeling to promote the emotional expression and social skills of adolescents with autism spectrum disorders. Research in Developmental Disabilities, 36, 396-403. DOI: 10.1016/j.ridd.2014.10.015.

Cipresso, P., Giglioli, I., Raya, M. & Riva, G. (2018). The Past, Present, and Future of Virtual and Augmented Reality Research: A Network and Cluster Analysis of the Literature. Frontiers in Psychology, 9, 2086-2018. DOI: 10.3389/fpsyg.2018.02086.

Deb, S., Suraksha, & Bhattacharya, P. (2018). Augmented Sign Language Modeling (ASLM) with interaction design on smartphone - An assistive learning and communication tool for inclusive classroom. Procedia Computer Science, 125, 492-500. DOI: 10.1016/j.procs.2017.12.064.

Due, B. (2014). The future of smart glasses: An essay about challenges and possibilities with smart glasses. Working Papers on Interaction and Communication, 1(2), 1-21. Retrieved from https://circd.ku.dk/images/An_essay_about_the_future_of_smart_glasses.pdf

Gironacci, I., Mc-Call, R. & Tamisier, T. (2017). Collaborative Storytelling Using Gamification and Augmented Reality. In Luo Y. (Ed.), Cooperative Design, Visualization, and Engineering. 14th International Conference CDVE 2017. Lecture Notes in Computer Science, vol. 10451, 90-93. Springer, Cham.

Godwin-Jones, R. (2016). Augmented reality and language learning: From annotated vocabulary to place-based mobile games. Language Learning & Technology, 20(3), 9-19. Retrieved from http://llt.msu.edu/issues/october2016/emerging.pdf

Howerton-Fox, A. & Falk, J. (2019). Deaf Children as “English Learners”: The Psycholinguistic Turn in Deaf Education. Educ. Sci, 9(2), 133. DOI: 10.3390/educsci9020133.

Ioannou, A. & Constantinou, V. (2018). Augmented Reality Supporting Deaf Students in Mainstream Schools: Two Case Studies of Practical Utility of the Technology. In Auer M., Tsiatsos T. (Eds.), Interactive Mobile Communication Technologies and Learning. IMCL 2017. Advances in Intelligent Systems and Computing, vol 725, 387-396. Springer, Cham.

Ip, H., Wong, S., Chan, D., Byrne, J., Li, C., Yuan, V. S. N., … Wong, J. (2018). Enhance emotional and social adaptation skills for children with autism spectrum disorder: A virtual reality enabled approach. Computers & Education, 117, 1-15. DOI: 10.1016/j.compedu.2017.09.010.

Jenkins, H., Ford, S., & Green, J. (2013). Spreadable Media: Creating Value and Meaning in a Networked Culture. New York: NYU Press.

Jofré, N., Rodríguez, G., Alvarado, Y., Fernández, J., & Guerrero, R. A. (2016). Non-Verbal Communication for a Virtual Reality Interface. In XXII Congreso Argentino de Ciencias de la Computación (CACIC 2016). Retrieved from http://hdl.handle.net/10915/55807

Korte, J., Potter, L. & Nielsen, S. (2017). The impacts of deaf culture on designing with deaf children. In Proceedings of the 29th Australian Conference on Computer-Human Interaction (OZCHI’17). Association for Computing Machinery, New York, NY, USA, 135-142. DOI: 10.1145/3152771.3152786.

Kushalnagar, R. (2019). Deafness and Hearing Loss. In Yesilada Y., Harper S. (Eds.), Web Accessibility. Human-Computer Interaction Series (pp.35-47). London: Springer. DOI: 10.1007/978-1-4471-7440-0_3.

Lee, I.-J., Lin, L.-Y., Chen, C.-H., & Chung, C.-H. (2018). How to Create Suitable Augmented Reality Application to Teach Social Skills for Children with ASD. In State of the Art Virtual Reality and Augmented Reality Knowhow. London: IntechOpen. DOI: 10.5772/intechopen.76476.

Levin-Gelman, D. (2014). Design for Kids: Digital Products for Playing and Learning. New York: Rosenfeld Media; Beverly, Massachusetts.

Lidwell, W., Holden, K., & Butler, J. (2003). Universal Principles of Design. Beverly: Rockport Publishers, Inc.

Malzkuhn, M., & Herzig, M. (2013). Bilingual storybook app designed for deaf children based on research principles. In Proceedings of the 12th International Conference on Interaction Design and Children (IDC ’13). Association for Computing Machinery, New York, NY, USA, 499-502. DOI: 10.1145/2485760.2485849.

Milgram, P., & Kishino, F. (1994). A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Information Systems, E77-D, (12), 1321-1329. Retrieved from https://cs.gmu.edu/~zduric/cs499/Readings/r76JBo-Milgram_IEICE_1994.pdf

Miller, A., Malasig, J., Castro, B., Hanson, V. L., Nicolau, H., & Brandão, A. (2017). The Use of Smart Glasses for Lecture Comprehension by Deaf and Hard of Hearing Students. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA ’17). Association for Computing Machinery, New York, NY, USA, 1909-1915. DOI: 10.1145/3027063.3053117.

Mirzaei, M. R., Ghorshi, S., & Mortazavi, M. (2014). Audio-visual speech recognition techniques in augmented reality environments. Vis Comput, 30(3), 245-257. DOI: 10.1007/s00371-013-0841-1.

Nam, Y. (2015). Designing interactive narratives for mobile augmented reality. Cluster Computing, 18(1), 309-320. DOI: 10.1007/s10586-014-0354-3.

National Association of the Deaf (2019). Community and Culture. Retrieved from https://www.nad.org/

Parton, B. S. (2017). Glass Vision 3D: Digital Discovery for the Deaf. TechTrends, 61(2), 141-146. DOI: 10.1007/s11528-016-0090-z.

Pavlik, J. & Bridges, F. (2013). The Emergence of Augmented Reality (AR) as a Storytelling Medium in Journalism. Journalism & Communication Monographs, 15(1), 4-59. DOI: 10.1177/1522637912470819.

Piredda, F., Ciancia, M., & Venditti, S. (2015). Social Media Fiction. In Schoenau-Fog H., Bruni L., Louchart S., Baceviciute S. (Eds.), Interactive Storytelling. ICIDS 2015. Lecture Notes in Computer Science, 944, 309-320. DOI: 10.1007/978-3-319-27036-4_29.

Potter, L. E., Korte, J., & Nielsen, S. (2014). Design with the Deaf: Do Deaf children need their own approach when designing technology? In Proceedings of the 2014 conference on interaction design and children (IDC’14), 249-252. New York: Association for Computing Machinery. DOI: 10.1145/2593968.2610464.

Schioppo, J., Meyer, Z., Fabiano, D., & Canavan, S. (2019). Sign Language Recognition: Learning American Sign Language in a Virtual Environment. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (CHI EA ’19). Association for Computing Machinery, New York, NY, USA, Paper LBW1422, 1-6. DOI: 10.1145/3290607.3313025.

Suggs, T. (2018). The importance of storytelling to address deaf disempowerment. In Holcomb, T. & Smith, D. (Eds.), Deaf Eyes on Interpreting (pp.9-19). Washington: Gallaudet University Press.

Walker, M. & Trimboli, A. (1989). Communicating Affect: The Role of Verbal and Nonverbal Content. Journal of Language and Social Psychology, 8(3-4), 229-248. DOI: 10.1177/0261927X8983005.

Watanabe, D., Takeuchi, Y., Matsumoto, T., Kudo, H., & Ohnishi, N. (2018). Communication Support System of Smart Glasses for the Hearing Impaired. In Miesenberger K., Kouroupetroglou G. (Eds.), Computers Helping People with Special Needs (pp.225-232). ICCHP 2018. Lecture Notes in Computer Science, vol 10896. Cham: Springer.

Winzer, P., Spierling, U., Massarczyk, E., & Neurohr, K. (2017). Learning by Imagining History: Staged Representations in Location-Based Augmented Reality. In Dias J., Santos P., Veltkamp R. (Eds.), Games and Learning Alliance (pp.173-183). GALA 2017. Lecture Notes in Computer Science, vol 10653. Cham: Springer.

World Health Organization. (2019). Deafness and hearing loss. Retrieved from https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss

Yilmaz, R. M., & Goktas, Y. (2017). Using augmented reality technology in storytelling activities: Examining elementary students’ narrative skill and creativity. Virtual Reality, 21(2), 75-89. DOI: 10.1007/s10055-016-0300-1.

Acknowledgements

We would like to give special thanks to the company IT People, which helped all the students of the MSc in Visual Culture at IADE - Faculty of Design, Communication and Technology, European University, by clarifying technical issues regarding their project concepts.

Received: 2019.08.29. Accepted: 2020.04.20

Biographical notes

Susanna Berra is a student in the Master’s degree programme in Digital and Interaction Design at Politecnico di Milano. She obtained her Bachelor’s degree in Architecture summa cum laude at Politecnico di Milano. Her studies mainly concern User Experience Design, with a focus on storytelling strategies.

Email: susannaberra@libero.it

Address: Politecnico di Milano. Piazza Leonardo da Vinci 32, 20133 Milano | Italy

Cláudia Pernencar holds a PhD in Digital Media from NOVA FCSH, in collaboration with the Austin Portugal Program. She is a consultant in the area of Design for Health and Well-Being and teaches in the areas of Interaction Design and Interface Design.

Ciência ID: 4716-A442-2BB2. Scopus Author ID: 56025620000 Email: claudiapernencar@fcsh.unl.pt

Institutional address: ICNOVA Instituto de Comunicação da NOVA. Colégio Almada Negreiros. Campus de Campolide, Gab. 348 // Postal address: Av. de Berna, 26 C, 1069-061 Lisboa | Portugal

Flávio Almeida holds a PhD in Design from the Faculty of Architecture of the University of Lisbon. He has created and worked in the areas of music, visual design, and audiovisual production, and has taught in the areas of design, audiovisual, and visual culture since 2009.

Ciência ID: 6C1C-4C76-E086. Scopus Author ID: 57204758652. Email: flavio.almeida@universidadeeuropeia.pt

Institutional address: Universidade Europeia / IADE / UNIDCOM. Av. Dom Carlos I 4, 1200-649 Lisboa

Notes

[1] E.g. Tech growth and communities: What’s good for tech companies can challenge a community - from Today in Technology: The top 10 tech issues for 2019. Retrieved from https://blogs.microsoft.com/on-the-issues/2019/01/08/today-in-technology-the-top-10-tech-issues-for-2019/

[2] Leap Motion (2010). Retrieved from https://www.leapmotion.com/.

[3] Captioning on Glass | Google Glass. (2014). Retrieved from http://cog.gatech.edu/.

[4] Epson Moverio BT-300 (2016). Retrieved from https://www.epson.it/products/see-through-mobile-viewer/moverio-bt-300.

[5] Smart Caption Glasses | National Theatre of London. (2018). Retrieved from https://www.nationaltheatre.org.uk/your-visit/access/caption-glasses.

[6] Signglasses | Brigham Young University. (2014). Retrieved from https://science360.gov/obj/video/3996a8a2-f726-4d2e-bba5-17f8bac691fc/signglasses.

[7] Fantasia Express: an AR storytelling on trains | Immersive Storylab. (2019). Retrieved from http://immersivestorylab.com/portfolio/fantasia-express/.

[8] The Odyssey: an AR storytelling on boats | Immersive Storylab (2019). Retrieved from http://immersivestorylab.com/portfolio/the-oddysey-ar-storytelling-on-boats/.

[9] Secret Coast: Augmented Reality Storytelling | Immersive Storylab (2018). Retrieved from http://immersivestorylab.com/portfolio/secretcoast/.

[10] Wonderscope | Within. (2018). Retrieved from https://wonderscope.com/.

[11] Story Scopes | Immersive Storylab. (2019). Retrieved from http://immersivestorylab.com/portfolio/storyscopes/.

[12] 110 Stories - What’s your Story? | Brian August. (2011). Retrieved from http://www.110stories.com/.

[13] This concept project was developed in 2019, in collaboration with the company IT People (https://itpeopleinnovation.com/), by an Erasmus student as part of a class on Digital Interfaces in the MSc in Visual Culture at IADE - Faculty of Design, Communication and Technology, European University.

[14] A technique that uses fictitious users to guide decisions about the interactions and features of a system (Lidwell et al., 2003).